Unit 1: An Introduction to Diffusion Models

Welcome to Unit 1 of the Hugging Face Diffusion Models Course! In this unit, you will learn the basics of how diffusion models work and how to create your own using the 🤗 Diffusers library.

Start this Unit :rocket:

Here are the steps for this unit:

- Make sure you’ve signed up for this course so that you can be notified when new material is released.

- Read through the introductory material below as well as any of the additional resources that sound interesting.

- Check out the Introduction to Diffusers notebook below to put theory into practice with the 🤗 Diffusers library.

- Train and share your own diffusion model using the notebook or the linked training script.

- (Optional) Dive deeper with the Diffusion Models from Scratch notebook if you’re interested in seeing a minimal from-scratch implementation and exploring the different design decisions involved.

- (Optional) Check out this video for an informal run-through of the material for this unit.

:loudspeaker: Don’t forget to join the Discord, where you can discuss the material and share what you’ve made in the #diffusion-models-class channel.

What Are Diffusion Models?

Diffusion models are a relatively recent addition to a group of algorithms known as ‘generative models’. The goal of generative modeling is to learn to generate data, such as images or audio, given a number of training examples. A good generative model will create a diverse set of outputs that resemble the training data without being exact copies. How do diffusion models achieve this? Let’s focus on the image generation case for illustrative purposes.

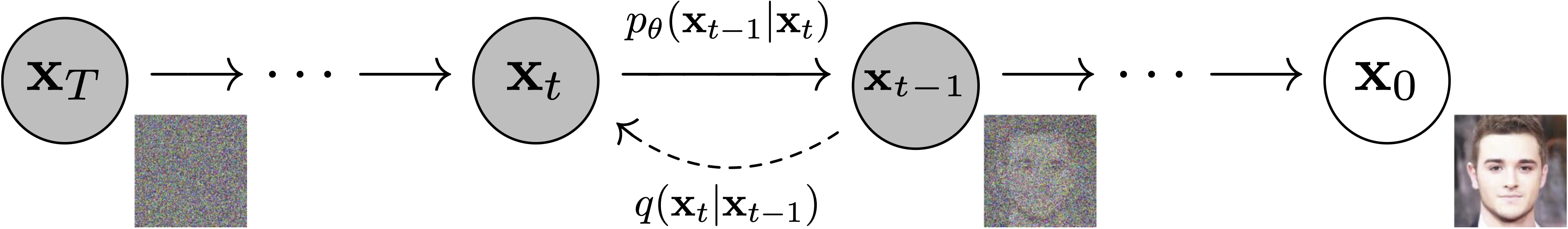

Figure from the DDPM paper (https://arxiv.org/abs/2006.11239).

The secret to diffusion models’ success is the iterative nature of the diffusion process. Generation begins with random noise, but this is gradually refined over a number of steps until an output image emerges. At each step, the model estimates how we could go from the current input to a completely denoised version. However, since we only make a small change at every step, any errors in this estimate at the early stages (where predicting the final output is extremely difficult) can be corrected in later updates.

Training the model is relatively straightforward compared to some other types of generative model. We repeatedly:

1. Load in some images from the training data.
2. Add noise, in different amounts. Remember, we want the model to do a good job estimating how to ‘fix’ (denoise) both extremely noisy images and images that are close to perfect.
3. Feed the noisy versions of the inputs into the model.
4. Evaluate how well the model does at denoising these inputs.
5. Use this information to update the model weights.
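The training steps above can be sketched in a few lines of PyTorch. This is a minimal illustration rather than the course's implementation: the linear noise schedule, the noise-prediction loss, the `TinyDenoiser` network, and the random placeholder "images" are all assumptions made so that the example is self-contained.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

NUM_STEPS = 1000  # assumed number of noise levels
betas = torch.linspace(1e-4, 0.02, NUM_STEPS)  # assumed linear schedule
alphas_cumprod = torch.cumprod(1.0 - betas, dim=0)


class TinyDenoiser(nn.Module):
    """Hypothetical stand-in for a real denoising network such as a U-Net."""

    def __init__(self, channels=1, hidden=16):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(channels + 1, hidden, 3, padding=1),
            nn.SiLU(),
            nn.Conv2d(hidden, channels, 3, padding=1),
        )

    def forward(self, x, t):
        # Pass the normalised timestep to the network as an extra channel.
        t_map = (t.float() / NUM_STEPS).view(-1, 1, 1, 1)
        t_map = t_map.expand(-1, 1, *x.shape[2:])
        return self.net(torch.cat([x, t_map], dim=1))


def add_noise(clean, noise, t):
    """Step 2: mix clean images with noise; larger t means more noise."""
    a = alphas_cumprod[t].view(-1, 1, 1, 1)
    return a.sqrt() * clean + (1 - a).sqrt() * noise


def train_step(model, optimizer, clean):
    noise = torch.randn_like(clean)
    t = torch.randint(0, NUM_STEPS, (clean.shape[0],))  # a different amount per image
    noisy = add_noise(clean, noise, t)                  # step 2
    pred = model(noisy, t)                              # step 3
    loss = F.mse_loss(pred, noise)                      # step 4: how well was the noise estimated?
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()                                    # step 5
    return loss.item()


model = TinyDenoiser()
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
images = torch.rand(8, 1, 16, 16) * 2 - 1  # step 1: random placeholder batch in [-1, 1]
losses = [train_step(model, optimizer, images) for _ in range(20)]
```

Here the model is asked to predict the noise that was added, which is one common way to score denoising; predicting the clean image directly is another option.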

To generate new images with a trained model, we begin with a completely random input and repeatedly feed it through the model, updating it each time by a small amount based on the model prediction. As we’ll see, there are a number of sampling methods that try to streamline this process so that we can generate good images with as few steps as possible.
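As a rough sketch of this generation loop, the example below starts from pure noise and applies a DDPM-style update at each step. The schedule, the step count, and the `predict_noise` function are assumptions for illustration; in particular, `predict_noise` wraps an untrained layer that ignores the timestep, so the output here is not a meaningful image.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

NUM_STEPS = 50  # assumed; kept small so the sketch runs quickly
betas = torch.linspace(1e-4, 0.02, NUM_STEPS)  # assumed linear schedule
alphas = 1.0 - betas
alphas_cumprod = torch.cumprod(alphas, dim=0)

# Hypothetical stand-in for a trained noise-prediction network.
conv = nn.Conv2d(1, 1, kernel_size=3, padding=1)


def predict_noise(x, t):
    # Untrained and timestep-agnostic: a real model would use t.
    return conv(x)


@torch.no_grad()
def sample(predict_noise, shape):
    x = torch.randn(shape)  # begin with a completely random input
    for t in reversed(range(NUM_STEPS)):
        eps = predict_noise(x, t)
        # Move a small amount toward a less noisy image, based on the prediction.
        coef = betas[t] / (1 - alphas_cumprod[t]).sqrt()
        mean = (x - coef * eps) / alphas[t].sqrt()
        if t > 0:
            # Re-inject a little noise on every step except the last.
            x = mean + betas[t].sqrt() * torch.randn_like(x)
        else:
            x = mean
    return x


generated = sample(predict_noise, (4, 1, 16, 16))
```

Faster samplers keep the same overall structure but change the update rule so that fewer iterations are needed.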

We will show each of these steps in detail in the hands-on notebooks here in Unit 1. In Unit 2, we will look at how this process can be modified to add control over the model outputs through extra conditioning (such as a class label) or with techniques such as guidance. Units 3 and 4 will explore an extremely powerful diffusion model called Stable Diffusion, which can generate images given text descriptions.

Hands-On Notebooks

At this point, you know enough to get started with the accompanying notebooks! The two notebooks here come at the same idea in different ways.

| Chapter | Colab | Kaggle | Gradient | Studio Lab |
|---|---|---|---|---|
| Introduction to Diffusers | | | | |
| Diffusion Models from Scratch | | | | |

In Introduction to Diffusers, we show the different steps described above using building blocks from the diffusers library. You’ll quickly see how to create, train and sample your own diffusion models on whatever data you choose. By the end of the notebook, you’ll be able to read and modify the example training script to train diffusion models and share them with the world! This notebook also introduces the main exercise associated with this unit, where we will collectively attempt to figure out good ‘training recipes’ for diffusion models at different scales - see the next section for more info.

In Diffusion Models from Scratch, we show those same steps (adding noise to data, creating a model, training and sampling) but implemented from scratch in PyTorch as simply as possible. Then we compare this ‘toy example’ with the diffusers version, noting how the two differ and where improvements have been made. The goal here is to gain familiarity with the different components and the design decisions that go into them so that when you look at a new implementation you can quickly identify the key ideas.

Project Time

Now that you’ve got the basics down, have a go at training one or more diffusion models! Some suggestions are included at the end of the Introduction to Diffusers notebook. Make sure to share your results, training recipes and findings with the community so that we can collectively figure out the best ways to train these models.

Some Additional Resources

The Annotated Diffusion Model is a very in-depth walk-through of the code and theory behind DDPMs with maths and code showing all the different components. It also links to a number of papers for further reading.

The Hugging Face documentation on Unconditional Image Generation provides examples of how to train diffusion models using the official training example script, including code showing how to create your own dataset.

AI Coffee Break video on Diffusion Models: https://www.youtube.com/watch?v=344w5h24-h8

Yannic Kilcher Video on DDPMs: https://www.youtube.com/watch?v=W-O7AZNzbzQ

Found more great resources? Let us know and we’ll add them to this list.