sayakpaul/diffusion-sdxl-orpo

- Prompt

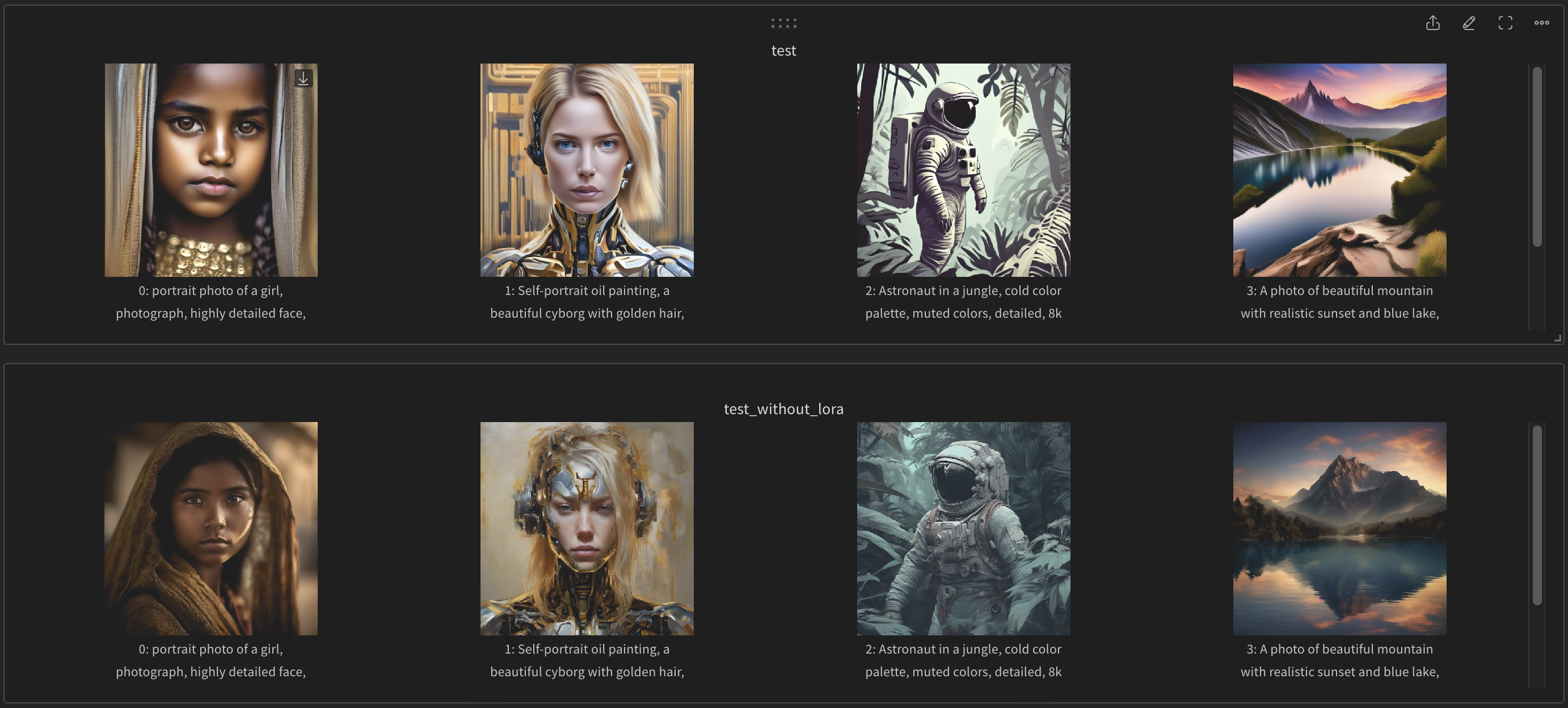

- portrait photo of a girl, photograph, highly detailed face, depth of field, moody light, golden hour, style by Dan Winters, Russell James, Steve McCurry, centered, extremely detailed, Nikon D850, award winning photography

- Prompt

- Self-portrait oil painting, a beautiful cyborg with golden hair, 8k

- Prompt

- Astronaut in a jungle, cold color palette, muted colors, detailed, 8k

- Prompt

- A photo of beautiful mountain with realistic sunset and blue lake, highly detailed, masterpiece

This is an experimental checkpoint that exists solely to validate whether ORPO can be applied to a diffusion model.

Model description

These are the LoRA weights for Diffusion ORPO. Diffusion ORPO is an effort to align a text-conditioned diffusion model on preference data without having to use a reference model. ORPO was originally proposed in [1].
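For orientation, the ORPO objective as formulated in [1] for language models can be written as below. This is a restatement of the paper's loss, not of the training script: how the likelihood terms are adapted to a diffusion model is defined in the script itself and is not described in this card.

```latex
% x: prompt, y_w: preferred output, y_l: rejected output,
% \lambda: weight of the odds-ratio term, \sigma: logistic sigmoid.
\mathrm{odds}_\theta(y \mid x) = \frac{P_\theta(y \mid x)}{1 - P_\theta(y \mid x)}

\mathcal{L}_{OR} = -\log \sigma\!\left( \log \frac{\mathrm{odds}_\theta(y_w \mid x)}{\mathrm{odds}_\theta(y_l \mid x)} \right)

\mathcal{L}_{ORPO} = \mathbb{E}_{(x,\, y_w,\, y_l)} \left[ \mathcal{L}_{SFT} + \lambda \cdot \mathcal{L}_{OR} \right]
```

Because both odds terms come from the same model being trained, no frozen reference model is required.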

Training was conducted using the script train_diffusion_orpo_sdxl_lora.py proposed in this PR.

Full training command used:

accelerate launch train_diffusion_orpo_sdxl_lora.py \
  --pretrained_model_name_or_path=stabilityai/stable-diffusion-xl-base-1.0 \
  --pretrained_vae_model_name_or_path=madebyollin/sdxl-vae-fp16-fix \
  --output_dir="diffusion-sdxl-orpo" \
  --mixed_precision="fp16" \
  --dataset_name=kashif/pickascore \
  --train_batch_size=8 \
  --gradient_accumulation_steps=2 \
  --gradient_checkpointing \
  --use_8bit_adam \
  --rank=8 \
  --learning_rate=1e-5 \
  --report_to="wandb" \
  --lr_scheduler="constant" \
  --lr_warmup_steps=0 \
  --max_train_steps=2000 \
  --checkpointing_steps=500 \
  --run_validation --validation_steps=50 \
  --seed="0" \
  --report_to="wandb" \
  --push_to_hub
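As a rough sanity check on the scale of this run, the flags above imply the following effective batch size and number of preference pairs seen. This is a minimal sketch under two assumptions that the card does not state: that `--max_train_steps` counts optimizer updates (as is typical for diffusers training scripts), and that training ran on a single process (`num_processes=1` is a placeholder). The function name is hypothetical.

```python
def training_scale(train_batch_size, gradient_accumulation_steps,
                   max_train_steps, num_processes=1):
    """Return (effective_batch_size, total_samples_seen).

    num_processes is an assumption; the model card does not say how many
    processes `accelerate launch` started.
    """
    effective_batch_size = (
        train_batch_size * gradient_accumulation_steps * num_processes
    )
    total_samples_seen = effective_batch_size * max_train_steps
    return effective_batch_size, total_samples_seen


# Values copied from the training command above.
effective, total = training_scale(
    train_batch_size=8,
    gradient_accumulation_steps=2,
    max_train_steps=2000,
)
print(f"effective batch size: {effective}, preference pairs seen: {total}")
```

Under these assumptions each optimizer update sees 16 preference pairs, for 32,000 pairs over the run; with more processes the totals scale linearly.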

Here's the corresponding run page on WandB. It gives a side-by-side comparison of the results with and without the Diffusion ORPO LoRA parameters.

Training details

The LoRA was trained on kashif/pickascore, a subset of yuvalkirstain/pickapic_v2.

How to use

Make sure you have the latest versions of the libraries installed:

pip install -U diffusers accelerate transformers peft

And then run:

import torch
from diffusers import DiffusionPipeline

# Load the SDXL base model in half precision and move it to the GPU.
pipe_id = "stabilityai/stable-diffusion-xl-base-1.0"
pipe = DiffusionPipeline.from_pretrained(pipe_id, torch_dtype=torch.float16).to("cuda")

# Apply the Diffusion ORPO LoRA weights on top of the base model.
pipe.load_lora_weights("sayakpaul/diffusion-sdxl-orpo")

image = pipe("A high-quality photo of a spaceship that looks like the head of a horse.", num_inference_steps=30).images[0]
image  # in a notebook, this displays the generated image
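To reproduce a with/without comparison locally, similar in spirit to the one on the WandB run page, one option is to generate the same prompt from the same seed before and after loading the LoRA. The helper below is a sketch, not part of the original card: `with_and_without_lora` and `make_generator` are hypothetical names, and it relies only on the pipeline's `load_lora_weights`, `unload_lora_weights`, and the `generator` call argument.

```python
def with_and_without_lora(pipe, lora_id, prompt, make_generator, **call_kwargs):
    """Return (base_image, lora_image) generated from identical seeds.

    pipe:           a pipeline with NO LoRA loaded yet.
    make_generator: zero-argument callable returning a freshly seeded
                    generator, so both runs start from the same noise.
    """
    # First pass: base model only.
    base_image = pipe(prompt, generator=make_generator(), **call_kwargs).images[0]

    # Second pass: same prompt and seed, with the LoRA applied.
    pipe.load_lora_weights(lora_id)
    lora_image = pipe(prompt, generator=make_generator(), **call_kwargs).images[0]

    # Restore the pipeline to its original, LoRA-free state.
    pipe.unload_lora_weights()
    return base_image, lora_image
```

With a freshly created `pipe` (before `load_lora_weights` is called), a call might look like `with_and_without_lora(pipe, "sayakpaul/diffusion-sdxl-orpo", prompt, lambda: torch.Generator("cuda").manual_seed(0), num_inference_steps=30)`. The seed value here is arbitrary.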

Refer to this guide to learn more about LoRA inference in diffusers.

Citations

[1] ORPO: Monolithic Preference Optimization without Reference Model; Jiwoo Hong, Noah Lee, James Thorne; https://arxiv.org/abs/2403.07691.
